The increasing prevalence of web scraping has expanded its usage areas considerably. Many AI applications today regularly feed their datasets with up-to-date data, often sourced through tools that scrape Google Images and other target websites. This creates a continuous flow of data into artificial intelligence systems.

Image processing is one of the most popular areas in artificial intelligence applications. Image processing is a field of computer science that focuses on enabling computers to identify and understand objects and people in images and videos. Like other types of artificial intelligence, image processing aims to perform and automate tasks that replicate human capabilities. In this case, image processing tries to copy both the way people see and the way they make sense of what they see.

The data needed to develop algorithms and reduce error margins in many fields, especially in image processing projects, is often obtained by web scraping. In this article, we will develop an application of a kind that is frequently used in image processing projects: we will scrape and download Google Images with the Python programming language. So let’s get started.

Project Setup

First, let’s create a folder on the desktop. Then let’s open a terminal in that folder and install the necessary libraries by running the command below.

| pip install requests bs4 lxml |

After installing the necessary libraries, let’s create a file named ‘index.py’ in the folder.

Code

Let’s paste the following code into the ‘index.py’ file we created.

| import requests, re, json, urllib.request
from bs4 import BeautifulSoup

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"
}

queryParameters = {"q": "marvel", "tbm": "isch", "hl": "en", "gl": "us", "ijn": "0"}

target_image_path = ".isv-r.PNCib.MSM1fd.BUooTd"
targeted_image_base_html_path = ".VFACy.kGQAp.sMi44c.lNHeqe.WGvvNb"

html = requests.get("https://www.google.com/search", params=queryParameters, headers=headers, timeout=30)
soup = BeautifulSoup(html.text, "lxml")

google_images = []

def scrape_and_download_google_images():
    images_data_in_json = convert_image_to_json()
    matched_image_data = re.findall(r'\"b-GRID_STATE0\"(.*)sideChannel:\s?{}}', images_data_in_json)
    removed_matched_thumbnails = remove_matched_get_thumbnails(matched_google_image_data=matched_image_data)
    matched_resolution_images = re.findall(r"(?:'|,),\[\"(https:|http.*?)\",\d+,\d+\]", removed_matched_thumbnails)
    full_resolution_images = get_resolution_image(matched_resolution_images=matched_resolution_images)

    for index, (image_data, image_link) in enumerate(zip(soup.select(target_image_path), full_resolution_images), start=1):
        append_image_to_list(image_data=image_data, image_link=image_link)
        print(f'{index}. image started to download')
        download_image(image_link=image_link, index=index)
        print(f'{index}. image successfully downloaded')

    print(f'scraped and downloaded images: {google_images}')

# The bodies of the helper functions are not listed here;
# download_image() is shown later in the article.
def remove_matched_get_thumbnails(matched_google_image_data): ...

def get_resolution_image(matched_resolution_images): ...

def convert_image_to_json(): ...

def append_image_to_list(image_data, image_link): ...

def download_image(image_link, index): ...

scrape_and_download_google_images() |

Looking at the code, let’s start with the fields that hold static values, shown below. The queryParameters variable specifies the search term and the properties of the images we want to scrape and download.

| headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"
}

queryParameters = {"q": "marvel", "tbm": "isch", "hl": "en", "gl": "us", "ijn": "0"}

target_image_path = ".isv-r.PNCib.MSM1fd.BUooTd"
targeted_image_base_html_path = ".VFACy.kGQAp.sMi44c.lNHeqe.WGvvNb"

html = requests.get("https://www.google.com/search", params=queryParameters, headers=headers, timeout=30)
soup = BeautifulSoup(html.text, "lxml")

google_images = [] |
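To make it easier to see how the queryParameters dictionary ends up in the request, here is a minimal sketch that builds the same query string with the standard library. The helper name `build_search_url` is hypothetical and not part of the article's code, and the comments on what each parameter does reflect how these Google parameters are commonly described, not anything stated in the original listing.

```python
from urllib.parse import urlencode

# Same keys and values as the article's queryParameters dictionary.
query_parameters = {
    "q": "marvel",   # search term
    "tbm": "isch",   # commonly described as selecting image search
    "hl": "en",      # commonly described as the interface language
    "gl": "us",      # commonly described as the country
    "ijn": "0",      # commonly described as the image results page index
}

def build_search_url(params):
    """Hypothetical helper: append params to the search URL as a query string."""
    return "https://www.google.com/search?" + urlencode(params)

search_url = build_search_url(query_parameters)
print(search_url)
# https://www.google.com/search?q=marvel&tbm=isch&hl=en&gl=us&ijn=0
```

Passing the dictionary through `params=` in `requests.get` performs an equivalent encoding, so changing the value of `"q"` is enough to search for a different term.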

The scrape_and_download_google_images() function is where the flow starts. The targeted images are scraped and then downloaded to the folder we specified.

| def scrape_and_download_google_images():
    images_data_in_json = convert_image_to_json()
    matched_image_data = re.findall(r'\"b-GRID_STATE0\"(.*)sideChannel:\s?{}}', images_data_in_json)
    removed_matched_thumbnails = remove_matched_get_thumbnails(matched_google_image_data=matched_image_data)
    matched_resolution_images = re.findall(r"(?:'|,),\[\"(https:|http.*?)\",\d+,\d+\]", removed_matched_thumbnails)
    full_resolution_images = get_resolution_image(matched_resolution_images=matched_resolution_images)

    for index, (image_data, image_link) in enumerate(zip(soup.select(target_image_path), full_resolution_images), start=1):
        append_image_to_list(image_data=image_data, image_link=image_link)
        print(f'{index}. image started to download')
        download_image(image_link=image_link, index=index)
        print(f'{index}. image successfully downloaded')

    print(f'scraped and downloaded images: {google_images}') |
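The second regular expression in this function is the step that pulls the full-resolution links out of the page data. As a rough illustration of what it captures, the sketch below runs the same pattern on a tiny hand-written string. The sample string, the example.com URLs, and the helper name `extract_image_links` are assumptions made for this demonstration; the real page data is much larger and its exact layout may differ.

```python
import re

# Pattern copied from scrape_and_download_google_images().
FULL_RESOLUTION_PATTERN = r"(?:'|,),\[\"(https:|http.*?)\",\d+,\d+\]"

# Hypothetical fragment: each entry is a URL followed by two numbers,
# preceded by ",,[" so that the pattern's prefix matches.
sample_data = (
    ',,["https://example.com/a.jpg",800,600],null'
    ',,["http://example.org/b.png",1024,768]'
)

def extract_image_links(text):
    """Hypothetical helper: return every URL captured by the pattern."""
    return re.findall(FULL_RESOLUTION_PATTERN, text)

links = extract_image_links(sample_data)
for index, link in enumerate(links, start=1):
    print(f"{index}. {link}")
# 1. https://example.com/a.jpg
# 2. http://example.org/b.png
```

Because the pattern has a single capturing group, `re.findall` returns only the URLs, not the two numbers that follow them.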

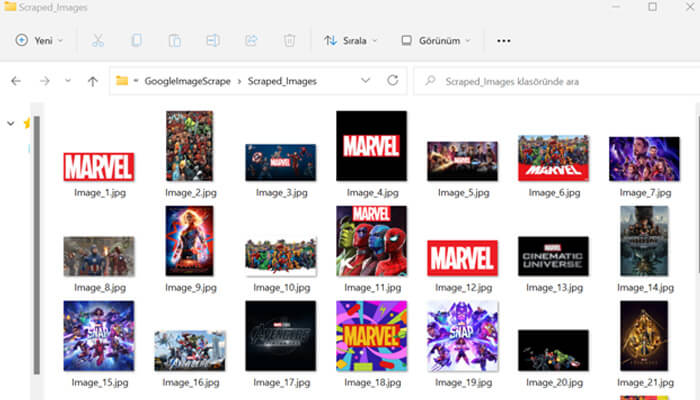

The scraped image is downloaded in the download_image(image_link=image_link, index=index) function. In this function, the image is saved in the “Scraped_Images” folder that we previously added to the project directory.

Note: Create the “Scraped_Images” folder in the project directory before running the application.

| def download_image(image_link, index):
    opener = urllib.request.build_opener()
    opener.addheaders = [('User-Agent', 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36')]
    urllib.request.install_opener(opener)
    urllib.request.urlretrieve(image_link, f'Scraped_Images/Image_{index}.jpg') |
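Since the download fails if the “Scraped_Images” folder is missing, one option is to let the script create it. The following is a minimal sketch of that idea, not the article's own approach; `build_image_path` is a hypothetical helper, and the demonstration uses a temporary directory so that it leaves nothing behind.

```python
import tempfile
from pathlib import Path

def build_image_path(index, folder="Scraped_Images"):
    """Hypothetical helper: create the folder if needed, return the image path."""
    target_folder = Path(folder)
    # exist_ok=True makes this a no-op when the folder is already there.
    target_folder.mkdir(parents=True, exist_ok=True)
    return target_folder / f"Image_{index}.jpg"

# Demonstration inside a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = build_image_path(1, folder=Path(tmp) / "Scraped_Images")
    folder_was_created = demo_path.parent.is_dir()
    print(demo_path.name)  # Image_1.jpg
```

Inside download_image(), the result could be passed to `urllib.request.urlretrieve` as `str(build_image_path(index))` in place of the hard-coded f-string. Note that the original function saves every file with a `.jpg` extension, whatever the format of the source image, and this sketch keeps that behavior.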

Run

To run the application, let’s open a terminal in the project folder and run the following command.

| python index.py |

After the application runs, the following information is printed to the console.

| 1. image started to download
1. image successfully downloaded
2. image started to download
2. image successfully downloaded
3. image started to download
3. image successfully downloaded
4. image started to download
4. image successfully downloaded
5. image started to download
5. image successfully downloaded
6. image started to download
6. image successfully downloaded
7. image started to download
7. image successfully downloaded
[…]
48. image started to download
48. image successfully downloaded
scraped and downloaded images: [
  {
    'image_title': 'Marvel Comics – Wikipedia',
    'image_source_link': 'https://en.wikipedia.org/wiki/Marvel_Comics',
    'image_link': 'https://upload.wikimedia.org/wikipedia/commons/thumb/b/b9/Marvel_Logo.svg/1200px-Marvel_Logo.svg.png'
  },
  {
    'image_title': 'Marvel Universe – Wikipedia',
    'image_source_link': 'https://en.wikipedia.org/wiki/Marvel_Universe',
    'image_link': 'https://upload.wikimedia.org/wikipedia/en/1/19/Marvel_Universe_%28Civil_War%29.jpg'
  },
  {
    'image_title': 'Marvel.com | The Official Site for Marvel Movies, Characters, Comics, TV',
    'image_source_link': 'https://www.marvel.com/',
    'image_link': 'https://i.annihil.us/u/prod/marvel/images/OpenGraph-TW-1200×630.jpg'
  },
  {
    'image_title': 'Marvel Entertainment – YouTube',
    'image_source_link': 'https://www.youtube.com/c/marvel',
    'image_link': 'https://yt3.ggpht.com/fGvQjp1vAT1R4bAKTFLaSbdsfdYFDwAzVjeRVQeikH22bvHWsGULZdwIkpZXktcXZc5gFJuA3w=s900-c-k-c0x00ffffff-no-rj'
  },
  {
    'image_title': 'Marvel movies and shows | Disney+',
    'image_source_link': 'https://www.disneyplus.com/brand/marvel',
    'image_link': 'https://prod-ripcut-delivery.disney-plus.net/v1/variant/disney/DA2E198288BFCA56AB53340211B38DE7134E40E4521EDCAFE6FFB8CD69250DE9/scale?width=2880&aspectRatio=1.78&format=jpeg'
  },
  {
    'image_title': 'An Intro to Marvel for Newbies | WIRED',
    'image_source_link': 'https://www.wired.com/2012/03/an-intro-to-marvel-for-newbies/',
    'image_link': 'https://media.wired.com/photos/5955ceabcbd9b77a41915cf6/master/pass/marvel-characters.jpg'
  },
  {
    'image_title': 'How to Watch Every Marvel Movie in Order of Story – Parade: Entertainment, Recipes, Health, Life, Holidays',
    'image_source_link': 'https://parade.com/1009863/alexandra-hurtado/marvel-movies-order/',
    'image_link': 'https://parade.com/.image/t_share/MTkwNTgxMjkxNjk3NDQ4ODI4/marveldisney.jpg'
  },
  {
    'image_title': 'Marvel Comics | History, Characters, Facts, & Movies | Britannica',
    'image_source_link': 'https://www.britannica.com/topic/Marvel-Comics',
    'image_link': 'https://cdn.britannica.com/62/182362-050-BD31B42D/Scarlett-Johansson-Black-Widow-Chris-Hemsworth-Thor.jpg'
  },
  {
    'image_title': 'Captain Marvel (2019) – IMDb',
    'image_source_link': 'https://www.imdb.com/title/tt4154664/',
    'image_link': 'https://m.media-amazon.com/images/M/MV5BMTE0YWFmOTMtYTU2ZS00ZTIxLWE3OTEtYTNiYzBkZjViZThiXkEyXkFqcGdeQXVyODMzMzQ4OTI@._V1_FMjpg_UX1000_.jpg'
  },
  […]
] |

Let’s look at the “Scraped_Images” folder to check the downloaded images.
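The folder can also be checked from Python instead of a file browser. The sketch below is one possible way to do that; `list_downloaded_images` is a hypothetical helper that relies only on the `Image_{index}.jpg` naming used by download_image(), and the demonstration creates empty placeholder files in a temporary directory, not real downloads.

```python
import tempfile
from pathlib import Path

def list_downloaded_images(folder="Scraped_Images"):
    """Hypothetical helper: return Image_<n>.jpg file names ordered by n."""
    target = Path(folder)
    if not target.is_dir():
        return []
    numbered = []
    for path in target.glob("Image_*.jpg"):
        suffix = path.stem.split("_", 1)[1]
        if suffix.isdigit():  # skip files that do not follow the naming scheme
            numbered.append((int(suffix), path.name))
    return [name for _, name in sorted(numbered)]

# Demonstration with empty placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    for index in (1, 2, 10):
        (Path(tmp) / f"Image_{index}.jpg").touch()
    demo_listing = list_downloaded_images(tmp)
    print(demo_listing)
# ['Image_1.jpg', 'Image_2.jpg', 'Image_10.jpg']
```

Sorting by the numeric index keeps `Image_10.jpg` after `Image_2.jpg`, which a plain alphabetical sort would not.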

Conclusion

We scraped and downloaded Google Images with Python, a task that image processing projects often need. If you want to get the images you need from Google without writing any code, explore the Zenserp API. Its documentation is comprehensive and constantly updated.